We quickly attracted customers like Amazon, Walmart, Yandex, and major market news aggregators. Rather than passing in individual URLs, Crawlbot enabled our customers to ask questions like “let me know about all price changes across Target, Macys, JCrew, GAP, and 100 other retailers” or “let me build a news aggregator for my industry vertical”. Crawlbot worked as a cloud-managed search engine, crawling entire domains, feeding the urls into our automatic analysis technology, and returning entire structured databases.Ĭrawlbot allowed us to grow the market a bit beyond an individual developer tool to businesses that were interested in market intelligence, whether about products, news aggregation, online discussion, or their own properties. We productized this as a product called Crawlbot, which allowed our customers to create their own custom crawls of sites, by providing a set of domains to crawl. We integrated the Gigablast technology into Diffbot, essentially adding a highly optimized web rendering engine and our automatic classification and extraction technology to Gigablast’s spidering, storage, search, and indexing technology.

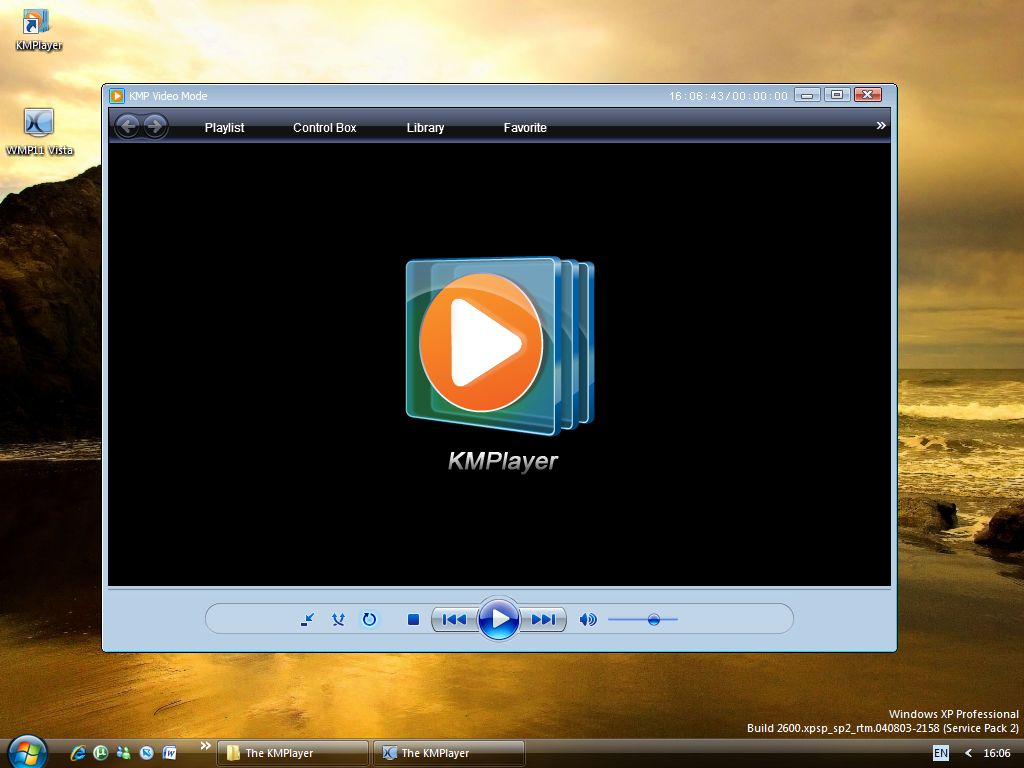

Difebot mkplayer how to#

Fortunately for us, this meant that we did not have to expend significant resources in learning how to operate a production crawl of the web, and could focus on the task of making meaning out of the web pages.

Difebot mkplayer code#

His team had written over half a million lines of C++ code to work out many of the edge-cases required to crawl the 99.999% of the web. Matt had competed against Google in the first search wars in the mid-2000s (remember when there were multiple search engines?), had achieved a comparably-sized web index, with real-time search, with a much smaller team and hardware infrastructure. Our next big break came when we met Matt Wells, the founder of the Gigablast search engine, who we hired as our VP of Search.

Difebot mkplayer software#

This niche market (the set of software developers that have a bunch of URLs to analyze) provided us a proving grounds for our technology and allowed us to build a profitable company around advancing the state-of-the-art in automated information extraction. Diffbot quickly powered apps like AOL, Instapaper, Snapchat, DuckDuckGo, and Bing, who used Diffbot to turn their URLs into structured information about articles, products, images, and discussion entities.

For many kinds of web applications automatically extracting structure from arbitrary URLs works 10X better compared to the approach of manually creating scraping rules for each site and maintaining these rulesets. We launched this as a paid API on Hacker News for developers, which meant that the only way we would survive was if the technology provided something of value that was better than what could be produced in-house or by off-the-shelf solutions. We started perfecting the technology to automatically render and extract structured data from a single page, starting with article pages, and moving on to all the major kinds of pages on the web. So, we just decided to start developing the technology anyways, but without crawling the web. Bing was spending upwards of $1B per quarter to maintain a fast-follower position.Īs a bootstrapped startup starting out at this time, we didn’t have the resources to crawl the whole web nor were we willing to burn a large amount of investors’ money before proving the technology to ourselves. Even Yahoo eventually got out of the web crawling business, effectively outsourcing their crawl to Bing. However they were never able to build technology that is 10X better before resources ran out. Many of those startups in the late-2000s all raised large amounts of money with no more than an idea and a team to try to build a better Google. Crawling the web is capital intensive stuff, and many a well-funded startup and large company have gone bust trying to do so. However, as a small startup, we couldn’t crawl the web on day one. We believe that the only approach that can scale and make use of all of human knowledge is an autonomous system that can read and understand all of the documents on the public web. Our mission at Diffbot is to build the world’s first comprehensive map of human knowledge, which we call the Diffbot Knowledge Graph.
